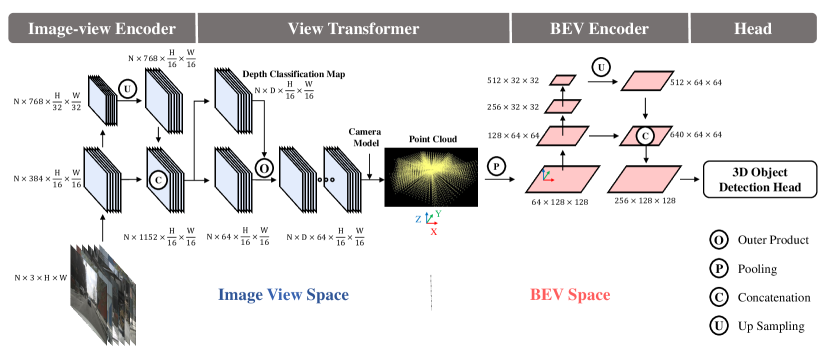

| Aspect | Voxel-based | Pillar-based | BEV-based | Multi-modal BEV fusion |
|---|---|---|---|---|
| Input type | LiDAR point cloud discretized into 3D voxels | LiDAR point cloud grouped into vertical pillars | LiDAR points or camera images projected to BEV | Multi-modal: LiDAR + camera + radar |
| Representation | 3D voxel grid | 2D pseudo-image (pillar features) | 2D BEV feature map | 2D BEV feature map with fused modalities |
| Computational cost | High (3D convolutions) | Moderate (2D convolutions) | Low to moderate (2D CNN) | High (fusion + multi-modal processing) |
| Spatial resolution | Good 3D spatial accuracy | Limited by pillar size | Good horizontal resolution, limited vertical resolution | High overall; retains vertical and semantic information |
| Detection performance | Strong on dense point clouds | Efficient and competitive | Efficient; well suited to the overhead view | Typically the highest accuracy; more robust to occlusion |
| Use cases | LiDAR-based 3D object detection | Real-time LiDAR detection | Autonomous driving, traffic analysis | Autonomous driving, robust multi-sensor perception |
| Pros | Accurate 3D localization | Fast, lightweight | Easy to integrate with planning | Robust; handles occlusion and multi-modal information |
| Cons | Computationally heavy | Loss of fine 3D detail | Limited vertical information | More complex; high compute requirements |
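To make the difference between the first three representations concrete, here is a minimal pure-Python sketch of how one LiDAR point cloud might be bucketed into voxels, pillars, and a BEV height map. The detection range, cell size, and function names (`voxelize`, `pillarize`, `bev_max_height`) are assumptions chosen for illustration. Real detectors go further: PointPillars, for example, encodes each pillar's points with a small learned network and scatters the resulting features into a 2D pseudo-image. Learned encoding and multi-modal fusion are not shown here.

```python
import math
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]

# Assumed detection range (metres), for illustration only.
X_RANGE = (0.0, 4.0)
Y_RANGE = (0.0, 4.0)
Z_RANGE = (-1.0, 3.0)


def in_range(p: Point) -> bool:
    """True if the point lies inside the assumed detection range."""
    x, y, z = p
    return (X_RANGE[0] <= x < X_RANGE[1]
            and Y_RANGE[0] <= y < Y_RANGE[1]
            and Z_RANGE[0] <= z < Z_RANGE[1])


def cell(value: float, lo: float, size: float) -> int:
    """Index of the grid cell along one axis that contains `value`."""
    return int((value - lo) // size)


def voxelize(points: List[Point], size: float) -> Dict[Tuple[int, int, int], List[Point]]:
    """Voxel-based: bucket points into 3D cells keyed by (ix, iy, iz)."""
    voxels = defaultdict(list)
    for p in filter(in_range, points):
        key = (cell(p[0], X_RANGE[0], size),
               cell(p[1], Y_RANGE[0], size),
               cell(p[2], Z_RANGE[0], size))
        voxels[key].append(p)
    return dict(voxels)


def pillarize(points: List[Point], size: float) -> Dict[Tuple[int, int], List[Point]]:
    """Pillar-based: bucket points into vertical columns keyed by (ix, iy).

    z is not discretized, so each pillar keeps its raw points; a learned
    encoder would normally turn them into one feature vector per pillar.
    """
    pillars = defaultdict(list)
    for p in filter(in_range, points):
        key = (cell(p[0], X_RANGE[0], size), cell(p[1], Y_RANGE[0], size))
        pillars[key].append(p)
    return dict(pillars)


def bev_max_height(points: List[Point], size: float) -> List[List[Optional[float]]]:
    """BEV: rasterize points into a 2D grid holding the max z per cell.

    Collapsing each vertical column to one scalar is what limits vertical
    information. Empty cells stay None.
    """
    nx = math.ceil((X_RANGE[1] - X_RANGE[0]) / size)
    ny = math.ceil((Y_RANGE[1] - Y_RANGE[0]) / size)
    grid: List[List[Optional[float]]] = [[None] * ny for _ in range(nx)]
    for p in filter(in_range, points):
        ix = min(cell(p[0], X_RANGE[0], size), nx - 1)  # clamp guards float edge cases
        iy = min(cell(p[1], Y_RANGE[0], size), ny - 1)
        if grid[ix][iy] is None or p[2] > grid[ix][iy]:
            grid[ix][iy] = p[2]
    return grid


if __name__ == "__main__":
    # The last point falls outside the assumed range and is dropped.
    pts = [(0.5, 0.5, 0.2), (0.6, 0.4, 1.7), (3.2, 1.1, 0.0), (9.0, 0.0, 0.0)]
    print(sorted(voxelize(pts, 1.0)))      # [(0, 0, 1), (0, 0, 2), (3, 1, 1)]
    print(sorted(pillarize(pts, 1.0)))     # [(0, 0), (3, 1)]
    print(bev_max_height(pts, 1.0)[0][0])  # 1.7
```

The two points near the origin land in separate voxels because their heights differ, share a single pillar, and leave only their maximum height (1.7) in the BEV cell. This mirrors the spatial-resolution and cons rows of the table: each step from voxels to pillars to BEV trades vertical detail for cheaper 2D processing.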